Course notes from Building Multimodal Search and RAG about Multimodal Learning.

- Instructor: Sebastian Witalec, head of developer relations at Weaviate,

- human’s learn multi-modally

- human’s foundational knowledge is built using multi-modal interaction with the world and not via language.

- multi-modal embedding models produce a joint embedding space that understand all your modalities (e.g.: text, video, audio, picture).

- Training:

- start with specialist models

- unify these models

- the vectors that they generate are going to be similar

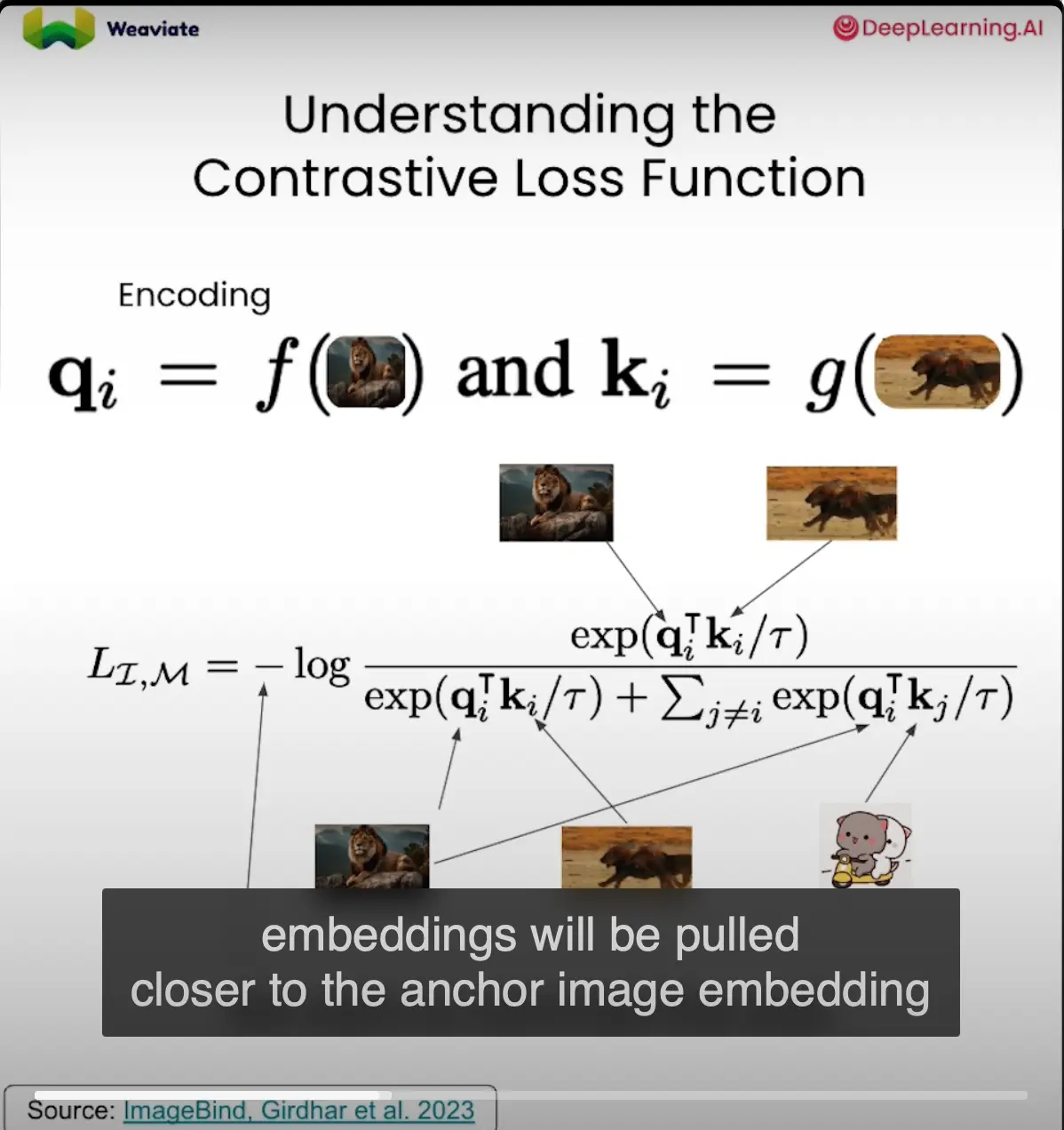

- Models are unified using a process called contrastive representative learning.

- CRL is a general purpose process that can be used to train any embedding model.

- create one unified vector space representation for multiple modalities

- by giving positive and negative examples, we train the models to pull closer vectors together for similar examples. and push away dissimilar examples

- Anchor point

- The pushing pulling process is achieved with the contrastive loss function.

- encode the anchor and the (+ve and -ve ) examples into vector embeddings.

- calculate the distance between the anchor and the examples.

- minimize the distance between the positive example and maximize the negative example during the training process.

- in multimodal learning, the examples can be from different modalities.

- Minimize the loss… (Girdhar et al., 2023)

Girdhar, R., El-Nouby, A., Liu, Z., Singh, M., Alwala, K. V., Joulin, A., & Misra, I. (2023). Imagebind: One Embedding Space to Bind Them All. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 15180–15190. https://arxiv.org/abs/2305.05665